For the last few years, we’ve heard the same warning from every major tech CEO: Earth is running out of juice.

Between the insatiable hunger of generative AI and the massive cooling requirements of modern GPUs, terrestrial data centers have become the “climate villains” of the 2020s. We’ve tried building them under the ocean, in Arctic bunkers, and next to nuclear plants. But as we step into 2026, the industry has realized that the best way to solve Earth’s energy problem is to simply leave Earth.

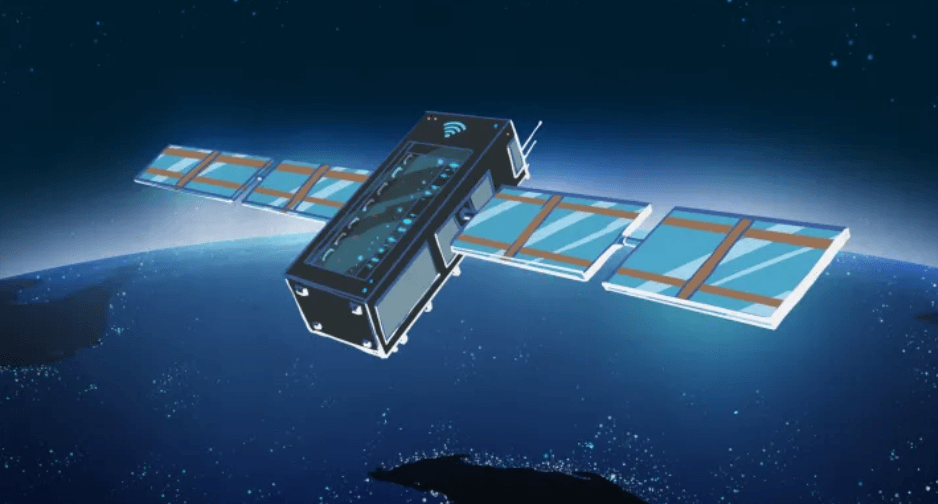

Welcome to the era of Orbital Computing. It sounds like something straight out of Interstellar, but as of this week, it is officially a commercial reality. With companies like Starcloud, Lonestar, and even Google launching hardware into the void, the “Cloud” is finally living up to its name.

Here is a deep dive into why your next AI query might be processed 500 kilometers above your head.

The “Gigawatt” Bottleneck

To understand why companies are spending millions to rocket servers into space, you have to look at the power bill on the ground.

A single top-tier AI data center in 2026 can consume as much electricity as 100,000 households. In places like Northern Virginia or Dublin, the local power grids are literally at a breaking point. We are reaching a “CFO Audit” phase where the cost of land, water for cooling, and carbon taxes is making Earth-based expansion a nightmare.
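
To put the household comparison into grid terms, here is a rough back-of-envelope conversion. The 10,500 kWh per household per year figure is an assumption added for illustration (it is not from any of the companies mentioned), and `average_power_mw` is a hypothetical helper name.

```python
HOURS_PER_YEAR = 8760


def average_power_mw(households: int, kwh_per_household_per_year: float) -> float:
    """Convert annual household energy use into a continuous power draw in MW."""
    total_kwh_per_year = households * kwh_per_household_per_year
    average_kw = total_kwh_per_year / HOURS_PER_YEAR
    return average_kw / 1000.0


if __name__ == "__main__":
    # ASSUMPTION: 10,500 kWh per household per year, an illustrative value only.
    print(f"{average_power_mw(100_000, 10_500):.0f} MW of continuous demand")
```

Under that assumption, 100,000 households work out to a continuous draw on the order of 120 MW, which is the scale of load a single site is asking a local grid to absorb.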

In space, the rules of physics change in our favor:

- Unlimited Solar: On Earth, solar panels are limited by weather, night cycles, and the atmosphere. In a dawn-dusk sun-synchronous orbit, a data center can stay in near-continuous sunlight, with no clouds or air to dim it.

- The Ultimate Heat Sink: Cooling is one of the biggest expenses for terrestrial centers. In space, the background of deep space sits at roughly -270°C. There is no air, so fans and convection don’t work, but massive passive radiators can dump heat into the void as infrared radiation with zero water consumption.

- Zero Land Tax: Space is, quite literally, infinite.
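
The solar and cooling claims above can be sanity-checked with two textbook formulas: sunlight above the atmosphere delivers roughly 1361 W/m², and a radiator sheds heat according to the Stefan-Boltzmann law. The sketch below is a simplified estimate, not anyone's actual design. The 30% panel efficiency, 0.9 emissivity, 300 K radiator temperature, and 1 MW load are all assumed placeholder values, and the function names are hypothetical.

```python
SOLAR_CONSTANT_W_M2 = 1361.0        # approximate sunlight intensity above the atmosphere
STEFAN_BOLTZMANN = 5.670374419e-8   # W / (m^2 K^4)


def panel_area_m2(load_w: float, efficiency: float) -> float:
    """Panel area needed to supply load_w in continuous, face-on sunlight."""
    return load_w / (SOLAR_CONSTANT_W_M2 * efficiency)


def radiator_area_m2(
    heat_w: float,
    radiator_temp_k: float,
    emissivity: float,
    sink_temp_k: float = 3.0,   # deep-space background, roughly -270 °C
    sides: int = 2,             # a flat panel radiates from both faces
) -> float:
    """Radiator area needed to reject heat_w by radiation alone.

    Simplification: ignores sunlight and Earth's infrared hitting the radiator.
    """
    flux_per_side = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k**4 - sink_temp_k**4
    )
    return heat_w / (flux_per_side * sides)


if __name__ == "__main__":
    load_w = 1_000_000.0  # ASSUMPTION: a 1 MW compute load, for illustration
    print(f"panels:   {panel_area_m2(load_w, efficiency=0.30):.0f} m^2")
    print(f"radiator: {radiator_area_m2(load_w, 300.0, emissivity=0.9):.0f} m^2")
```

With these placeholder numbers, 1 MW needs roughly 2,450 m² of panels and roughly 1,200 m² of double-sided radiator. Real radiators also absorb some sunlight and heat from Earth, so actual areas would be larger. The heat sink is free, but the hardware to reach it is not small.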

2026: The Year of the Hardware Launch

This isn’t just theory anymore. 2026 is the year the “Space Cloud” got its first heavy-duty hardware.

1. Starcloud’s H100 Orbit

In late 2025, the Redmond-based startup Starcloud (formerly Lumen Orbit) made history by successfully operating an Nvidia H100 GPU in Low Earth Orbit (LEO). This week, they confirmed that their second-generation “Micro Data Center” is scheduled for an H2 2026 launch. Their goal? To provide “GPU-as-a-Service” for other satellites, allowing them to process imagery in space rather than beaming raw data back to Earth.

2. Lonestar’s Lunar Archive

While most are looking at orbit, Lonestar Data Holdings is looking at the Moon. In February 2026, they are scheduled to land their “Freedom Data Centre” at the lunar south pole via an Intuitive Machines lander. This isn’t for high-speed AI; it’s the ultimate “Disaster Recovery” vault. If something catastrophic happens on Earth, a copy of human knowledge, and your company’s critical backups, will be sitting safely in a lunar lava tube.

3. Google’s Project Suncatcher

Not to be outdone, Google Research has been quiet about Project Suncatcher. However, leaked reports suggest they are testing “close-formation” satellite clusters that use high-bandwidth laser links to create a distributed supercomputer in the sky. They aren’t building one big satellite; they are building a “swarm” of intelligence.

The “Sovereignty” Factor: Data Without Borders

There is a legal reason for this shift that many people overlook: Data Sovereignty.

When your data is on a server in Ohio, it is subject to U.S. law. If it’s in Frankfurt, it’s under the GDPR. But whose law applies to data sitting in a satellite 500 km above international waters?

For industries like defense, high-frequency finance, and even some “unrestricted” AI research, space offers a legal “Grey Zone.” While space law (the 1967 Outer Space Treaty) says no nation can claim territory, a satellite generally stays under the jurisdiction of the country that registered it, which in practice is usually the country that launched it. This is creating a new market for “Sovereign Clouds”: places where data can exist outside the reach of terrestrial geopolitical squabbles.

The Technical Reality Check (It’s Not All Easy)

Before we get too excited, let’s be real. Space is a “high-reliability” nightmare.

- The Radiation Problem: Cosmic rays can flip bits in a processor, causing “silent data corruption” or frying a chip entirely. Space-based data centers have to use redundant architectures and specialized shielding that adds weight, and weight equals cost.

- The Latency Gap: For “real-time” gaming or high-frequency trading, the 30-50 ms round-trip to orbit is too slow. Space data centers are currently best suited for asynchronous tasks: training large AI models, deep data mining, and long-term storage.

- The Space Debris Risk: Launching thousands of servers adds to the “Kessler Syndrome” risk. One stray piece of junk hitting a $50 million orbital GPU would be a very bad day for the shareholders.
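
Two of the points above lend themselves to a quick sketch. The first function shows one classic form of redundancy, a bitwise majority vote across three copies of a value (often called triple modular redundancy). It is offered as an illustration of the idea, not as what any of the companies named here actually fly. The second computes the bare speed-of-light round trip to a satellite directly overhead. Both function names are hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three redundant copies of the same word.

    If a single copy suffers a bit flip, the other two outvote it.
    """
    return (a & b) | (a & c) | (b & c)


def round_trip_ms(altitude_km: float) -> float:
    """Speed-of-light round trip to a satellite directly overhead, in ms.

    Simplification: straight up and down, with no routing, queuing,
    or processing time included.
    """
    return 2.0 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000.0


if __name__ == "__main__":
    good = 0b1011
    flipped = good ^ 0b1000  # simulate one cosmic-ray bit flip in the third copy
    print(bin(majority_vote(good, good, flipped)))
    print(f"{round_trip_ms(500):.2f} ms")
```

The bare propagation time to 500 km is only a few milliseconds, so a 30-50 ms figure presumably reflects everything else on the path: slant angles, ground stations, routing, and processing. That is an inference, not something the operators have published.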

The Verdict: Moving the “Brain” Off-Planet

By the end of 2026, we will likely see the first AI model trained entirely in orbit. We are moving toward a world where the “Earth” is for living, and “Space” is for the heavy lifting of civilization. Just as we moved noisy, dirty factories out of city centers in the 20th century, we are now moving the noisy, power-hungry “thought factories” out of our biosphere.

The “Cloud” was always a metaphor. In 2026, it’s finally becoming a physical reality.

What’s your take? Would you trust your most sensitive data to a server orbiting the Earth at 17,000 miles per hour, or do you prefer having your “Cloud” firmly on the ground? Let’s discuss in the comments.
